Building a Multi-Tenancy Platform with Capsule and vCluster - Hard vs Soft Isolation

I’ve been running multi-tenant Kubernetes platforms for years, and the conversation always comes down to the same question: how isolated do tenants really need to be? The answer determines whether you’re managing one shared cluster or a hundred separate ones, and it has massive implications for cost, complexity, and operational overhead.

The industry has two emerging answers: Capsule (a CNCF sandbox project) for namespace-based soft multi-tenancy, and vCluster (an open-source project by Loft Labs) for virtual cluster hard isolation. Both solve real problems. But they take fundamentally different approaches.

In this post, I’ll walk through both tools based on actual production experience. We’ll look at the architecture, compare the trade-offs, and work through real implementation examples. By the end, you’ll know which tool fits your use case or whether you need both.

The Multi-Tenancy Problem Nobody Talks About

Running separate Kubernetes clusters per team sounds clean on paper. Complete isolation. No noisy neighbors. No shared blast radius. But here’s what happens in practice:

You start with 5 teams. Each gets their own AKS cluster. That’s 5 clusters to patch, 5 sets of add-ons to maintain, 5 node pools to scale, 5 monitoring stacks to configure. Within six months, you’re at 20 teams. Within a year, you’re managing 50+ clusters, and your platform team is drowning in operational toil.

The alternative, cramming everyone into one cluster with basic namespaces and RBAC, works until it doesn’t. A developer in the analytics team deploys a memory leak that starves the production API. Someone installs a cluster-scoped CRD that conflicts with another team’s operator. Network policies become an unmanageable mess. You’re firefighting noisy neighbor issues weekly.

Both extremes fail at scale. What you actually need is configurable isolation that matches the trust boundary and risk profile of each tenant. Internal dev teams don’t need the same isolation as external customers. Compliance workloads have different requirements than sandbox environments.

That’s the gap Capsule and vCluster fill.

Understanding the Isolation Spectrum

Before diving into tools, let’s clarify the isolation models:

| Isolation Level | Implementation | Trust Model | Cost | Ops Overhead |
|---|---|---|---|---|
| None | Shared namespaces | Single team | Lowest | Minimal |
| Soft | Namespace + RBAC + policies | Internal teams | Low | Low |
| Medium | Capsule tenants | Cross-team, same org | Low-Medium | Low |
| Hard | vCluster (virtual clusters) | External customers | Medium | Medium |
| Complete | Separate physical clusters | Zero trust / compliance | Highest | High |

Most organizations need a mix. Your internal dev teams can share soft-isolated tenants. Your SaaS customers need hard isolation. Compliance workloads might require complete separation.

The trick is having the tooling to support this without managing infrastructure chaos.

Capsule - Namespace-Based Multi-Tenancy Done Right

Capsule is a CNCF sandbox project that extends Kubernetes multi-tenancy using custom resources and admission webhooks. Think of it as a way to group namespaces into logical “tenants” and enforce policies, quotas, and isolation at that tenant boundary.

How Capsule Works

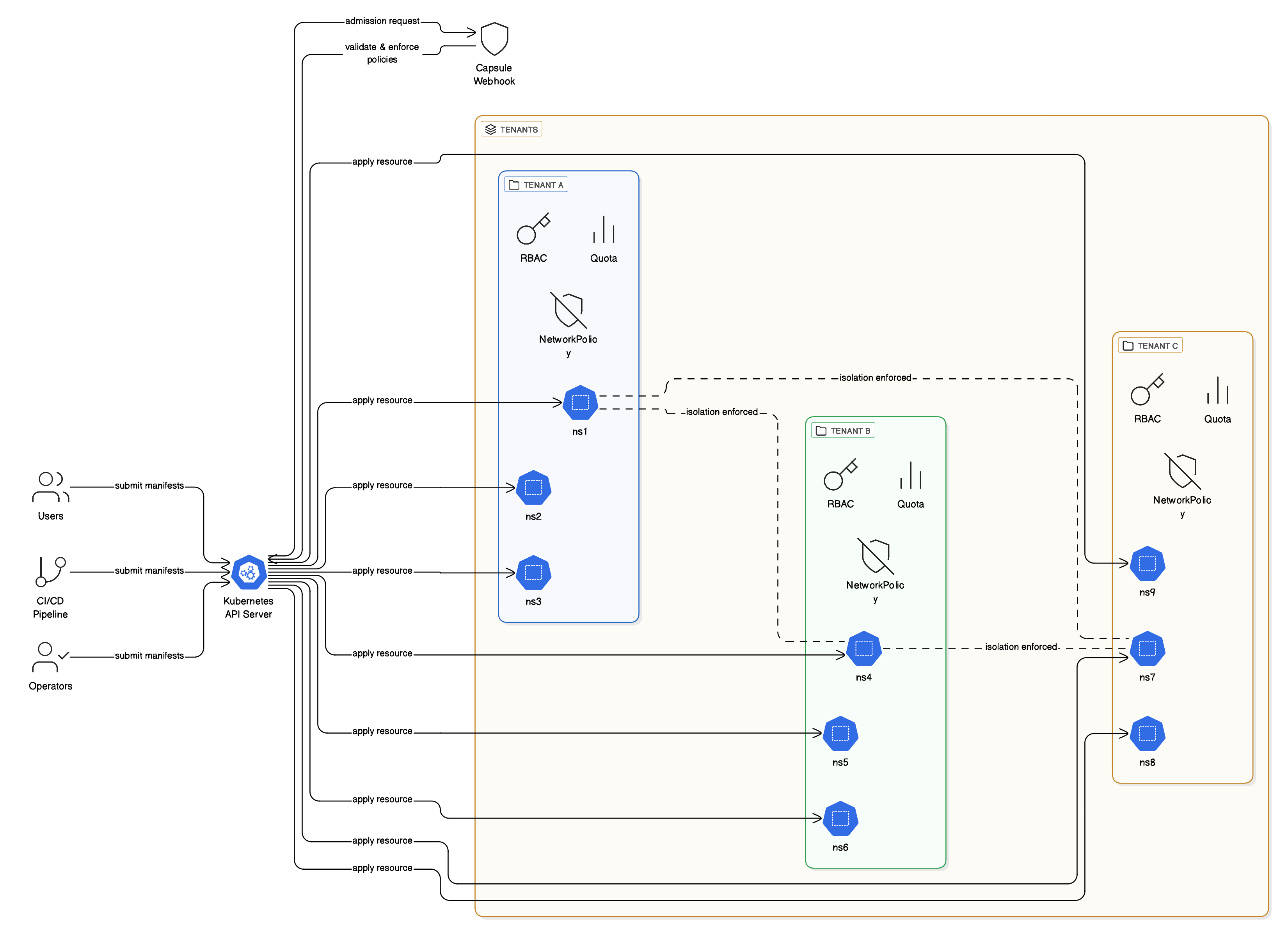

Capsule introduces a Tenant CRD that acts as a higher-level abstraction above namespaces. Each tenant has owners (users or groups), and those owners can create and manage namespaces within their tenant scope, but they’re restricted by the policies you define.

Here’s the request flow:

When a tenant owner tries to create a namespace or resource, Capsule’s admission webhook intercepts the request and validates it against tenant policies. If it passes, the resource is created with automatic labels, annotations, quotas, and network policies applied.

Why Capsule Works for Internal Teams

The beauty of Capsule is it uses native Kubernetes primitives. There’s no custom API server, no virtualization layer, no syncing complexity. It’s just policy enforcement on top of standard Kubernetes RBAC.

This means:

- Low overhead: A single webhook pod, minimal resource consumption

- Fast onboarding: Creating a tenant takes seconds

- Native tooling: kubectl, Helm, and existing tools work out of the box

- Centralized governance: Platform team controls policies, tenants self-serve within guardrails

A scalable multi-tenant Kubernetes setup can be achieved using Capsule as the core of the tenancy model. In such an architecture, each engineering team would have its own tenant, created automatically through Backstage templates. Tenants can be provisioned with predefined quotas, network policies, and ingress rules, ensuring consistent governance across the platform.

With this approach, teams can create and manage namespaces on demand, without filing tickets or waiting for manual approvals. Capsule enforces isolation and policy boundaries, while Backstage provides a seamless self-service interface. The result is a controlled yet flexible environment that supports dozens of teams while keeping operations lightweight and compliant.

Capsule Installation

Installing Capsule is straightforward with Helm:

    helm repo add projectcapsule https://projectcapsule.github.io/charts
    helm repo update projectcapsule

    helm install capsule projectcapsule/capsule \
      --namespace capsule-system \
      --create-namespace \
      --set "manager.options.forceTenantPrefix=true"

The forceTenantPrefix option ensures all namespaces created by tenants are prefixed with the tenant name, preventing naming conflicts.
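To make the naming rule concrete, here is a minimal Python sketch of the check that forceTenantPrefix implies. It is an illustration only, not Capsule's implementation: the function name is hypothetical, and it assumes the rule is simply "the namespace must start with the tenant name followed by a hyphen".

```python
def is_valid_tenant_namespace(tenant: str, namespace: str) -> bool:
    """Return True when the namespace is '<tenant>-<suffix>' with a non-empty suffix."""
    prefix = f"{tenant}-"
    return namespace.startswith(prefix) and len(namespace) > len(prefix)


if __name__ == "__main__":
    # Hypothetical tenant and namespace names, used only to exercise the rule.
    for candidate in ("platform-engineering-prod", "platform-eng-prod", "prod"):
        allowed = is_valid_tenant_namespace("platform-engineering", candidate)
        print(f"{candidate}: {'allowed' if allowed else 'rejected'}")
```

Under this rule a name such as platform-eng-prod would be rejected for a tenant called platform-engineering, which is worth remembering when you pick long tenant names.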

Creating Your First Tenant

Here’s a production-ready tenant definition for an engineering team:

    apiVersion: capsule.clastix.io/v1beta2
    kind: Tenant
    metadata:
      name: platform-engineering
    spec:
      # Tenant owners (can create/manage namespaces in this tenant)
      owners:
        - name: platform-team
          kind: Group

      # Limit to 5 namespaces per tenant
      namespaceOptions:
        quota: 5

      # Container resource limits
      # Note: Capsule replicates these as LimitRange objects into every
      # namespace of the tenant and keeps them in sync with this spec
      limitRanges:
        items:
          - limits:
              - type: Container
                default:
                  cpu: "1"
                  memory: "1Gi"
                defaultRequest:
                  cpu: "100m"
                  memory: "128Mi"
                max:
                  cpu: "4"
                  memory: "8Gi"

      # Tenant-scoped resource quotas (aggregate across ALL namespaces in this tenant)
      # Note: With scope: Tenant, the hard limits apply to the sum of all
      # namespaces in the tenant, not to each namespace individually
      resourceQuotas:
        scope: Tenant
        items:
          - hard:
              limits.cpu: "40"              # Total across all namespaces
              limits.memory: "80Gi"         # Total across all namespaces
              requests.storage: "500Gi"
              persistentvolumeclaims: "20"
              pods: "200"                   # Total pods across all namespaces

      # Network isolation - only allow traffic within tenant
      networkPolicies:
        items:
          - policyTypes:
              - Ingress
              - Egress
            podSelector: {}
            ingress:
              - from:
                  - namespaceSelector:
                      matchLabels:
                        capsule.clastix.io/tenant: platform-engineering
            egress:
              - to:
                  - namespaceSelector:
                      matchLabels:
                        capsule.clastix.io/tenant: platform-engineering
              - to:  # Allow DNS
                  - namespaceSelector:
                      matchLabels:
                        kubernetes.io/metadata.name: kube-system
                ports:
                  - protocol: UDP
                    port: 53
                  - protocol: TCP
                    port: 53
              - to:  # Allow external HTTPS (a podSelector only matches in-cluster pods)
                  - ipBlock:
                      cidr: 0.0.0.0/0
                ports:
                  - protocol: TCP
                    port: 443

      # Restrict ingress hostnames to tenant-specific pattern
      ingressOptions:
        allowedHostnames:
          allowedRegex: ".*\\.platform-eng\\.example\\.com$"

      # Only allow specific storage classes
      storageClasses:
        allowed:
          - managed-premium
          - azurefile

      # Restrict container registries
      # Note: Use registries that match the full image path or use short image names
      # "docker.io/library" pattern requires short names like "nginx:latest"
      # For full paths, use registries like ghcr.io, quay.io, or ACR
      containerRegistries:
        allowed:
          - "mycompany.azurecr.io"
          - "ghcr.io"
          - "quay.io"

This tenant gives the platform team:

- Up to 5 namespaces

- 40 total CPU cores and 80Gi memory (aggregate across all 5 namespaces)

- 200 total pods across all namespaces

- Network isolation through NetworkPolicies that Capsule replicates into each namespace (only internal tenant traffic + DNS + outbound HTTPS)

- Ingress only on *.platform-eng.example.com

- Restricted to approved storage classes and container registries

- Automatic RBAC with admin access in their namespaces
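The difference between an aggregate quota and a per-namespace quota is easy to miss, so here is a small Python sketch of the arithmetic. It models only the idea (sum usage across every namespace, then compare against the tenant-wide limit); the function name and the usage figures are hypothetical and this is not how Capsule computes it internally.

```python
def fits_tenant_quota(used_by_namespace: dict[str, float],
                      requested: float,
                      hard_limit: float) -> bool:
    """Check a new request against the tenant-wide limit, not a per-namespace one."""
    total_in_use = sum(used_by_namespace.values())
    return total_in_use + requested <= hard_limit


# Hypothetical CPU limits already claimed in each namespace of the tenant (cores).
CPU_IN_USE = {
    "platform-engineering-dev": 10.0,
    "platform-engineering-staging": 12.0,
    "platform-engineering-prod": 16.0,
}

if __name__ == "__main__":
    # 38 of the 40 cores are taken, so 2 more fit and 4 more do not,
    # regardless of which namespace asks for them.
    print(fits_tenant_quota(CPU_IN_USE, 2.0, 40.0))
    print(fits_tenant_quota(CPU_IN_USE, 4.0, 40.0))
```

The practical consequence: a busy dev namespace can exhaust the budget that production needs, so size the tenant limit for the sum of all environments.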

Once created, tenant owners can self-service namespaces:

    # As a tenant owner
    # (with forceTenantPrefix=true the name must start with the tenant name)
    kubectl create namespace platform-engineering-prod

    # Capsule automatically:
    # - Adds tenant label: capsule.clastix.io/tenant=platform-engineering
    # - Creates RoleBindings for tenant owners (admin, capsule-namespace-deleter)
    # - Replicates the tenant's LimitRange, ResourceQuota, and NetworkPolicy objects into the namespace
    # - Injects node selectors into pods (via mutating webhook)
    # - Enforces policies via admission webhooks:
    #   * Container registry restrictions
    #   * Ingress hostname validation
    #   * Storage class restrictions
    #
    # Note: With scope: Tenant, ResourceQuota limits are aggregate across the tenant, not per-namespace

Advanced Capsule Features

Node Selectors: Force tenant workloads onto specific node pools:

    spec:
      nodeSelector:
        workload-type: "cpu-intensive"
        environment: "production"

Service Metadata: Auto-inject labels and annotations on every Service the tenant creates:

    spec:
      serviceOptions:
        additionalMetadata:
          annotations:
            prometheus.io/scrape: "true"
            security.scan/enabled: "true"
          labels:
            tenant: platform-engineering
            cost-center: "engineering"

Priority Classes: Restrict which priority classes tenants can use:

    spec:
      priorityClasses:
        allowed:
          - tenant-low
          - tenant-medium
          # Block tenant-high and system priorities

Real-World Capsule Use Case

Imagine you need to give 10 internal teams self-service access to dev, staging, and production environments. Before Capsule, this meant:

- Manual namespace creation

- Copy-paste RBAC configs (error-prone)

- Network policies managed in a monolithic file (merge conflicts)

- No enforcement of quotas or naming conventions

With Capsule:

- Backstage template creates tenant in 10 seconds

- Teams create namespaces on-demand via kubectl or UI

- All policies automatically applied

- Platform team updates tenant CRD to adjust quotas/policies

- Zero tickets filed for namespace requests

We went from spending 2-3 hours per week on namespace management to zero. That time went into platform improvements instead.

vCluster - Virtual Kubernetes Clusters

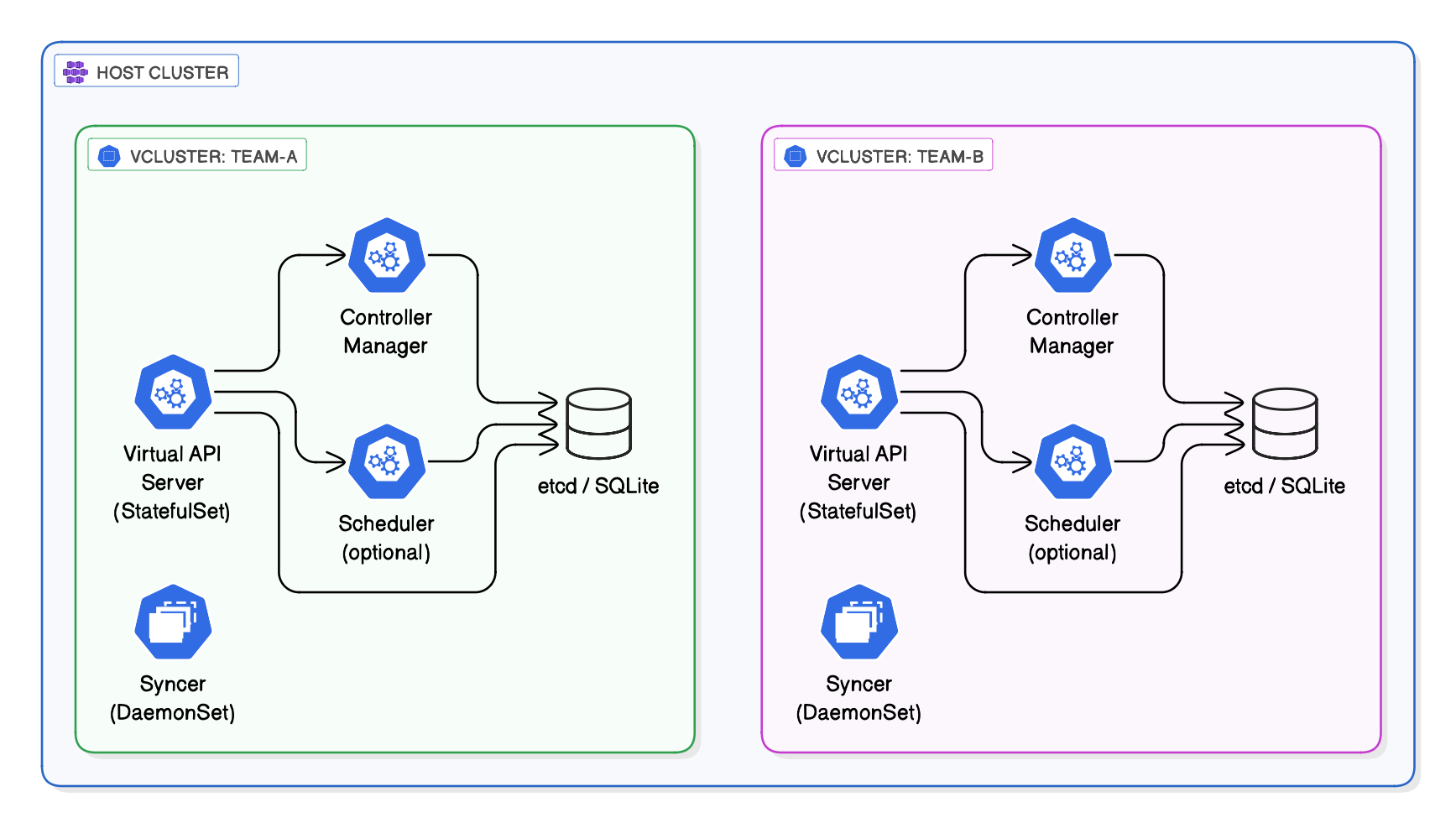

vCluster takes a completely different approach. Instead of namespaces with policies, it runs full Kubernetes control planes inside your host cluster. Each tenant gets their own virtual cluster with a dedicated API server, controller manager, and scheduler.

This is hard multi-tenancy. Each tenant has cluster-admin access to their virtual cluster. They can install CRDs, create cluster-scoped resources, and use any Kubernetes version they want — all without affecting other tenants or the host cluster.

How vCluster Works

The architecture is easiest to follow by tracing a single request. When a user runs kubectl against a vCluster:

- Request hits the virtual API server (running in a pod on the host)

- Virtual API server stores state in SQLite/etcd (in the same pod)

- When resources are scheduled (Pods, Services), the syncer mirrors them to the host cluster

- Host cluster actually runs the pods (on real nodes)

- Virtual cluster shows the pods as running in its own context

From the tenant’s perspective, it’s a real Kubernetes cluster. From the host cluster’s perspective, it’s just pods in a namespace.
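The syncing step can be modeled in a few lines of Python. This is a simplified sketch, not vCluster's code: it assumes the `<name>-x-<namespace>-x-<vcluster>` naming convention the syncer has used for objects copied to the host (verify against your vCluster version), it ignores the shortening applied to over-long names, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualPod:
    """A pod as the tenant sees it inside the virtual cluster."""
    name: str
    namespace: str


def host_pod_name(pod: VirtualPod, vcluster: str) -> str:
    # Assumed convention: virtual name and namespace are folded into one host name,
    # so pods from many virtual namespaces can coexist in a single host namespace.
    return f"{pod.name}-x-{pod.namespace}-x-{vcluster}"


def sync_to_host(pods: list[VirtualPod], vcluster: str, host_namespace: str) -> list[dict]:
    """Map every virtual pod to the host object that actually gets scheduled."""
    return [
        {"namespace": host_namespace, "name": host_pod_name(pod, vcluster)}
        for pod in pods
    ]


if __name__ == "__main__":
    virtual = [VirtualPod("web", "default"), VirtualPod("web", "staging")]
    for host_object in sync_to_host(virtual, "dev-team", "team-dev"):
        print(host_object)
```

Both virtual pods are named web, yet they land in the same host namespace without colliding, which is why the host only ever sees "pods in a namespace".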

Why vCluster Works for External Customers

The isolation model is fundamentally different from Capsule:

| Aspect | Capsule | vCluster |
|---|---|---|
| API Server | Shared | Isolated per tenant |
| RBAC | Namespace-level | Cluster-admin in virtual cluster |
| CRDs | Shared (conflicts possible) | Isolated per tenant |
| Kubernetes Version | Same as host | Can differ per tenant |
| Cluster-Scoped Resources | Restricted | Full access (virtualized) |

This makes vCluster ideal when:

- Tenants need cluster-admin privileges

- Tenants install operators with CRDs

- Different Kubernetes versions are required

- External customers expect “their own cluster”

- Compliance requires API server isolation

I’ve seen vCluster used in SaaS platforms where each enterprise customer gets a dedicated virtual cluster. The customer gets full cluster access and can deploy Helm charts, operators, and tools exactly as if they had their own cluster — but the SaaS provider only manages a handful of host clusters instead of hundreds.

vCluster Installation

You can install vCluster via CLI or Helm. The CLI is the fastest way to get started:

    # Install vCluster CLI
    curl -L -o vcluster "https://github.com/loft-sh/vcluster/releases/latest/download/vcluster-linux-amd64"
    chmod +x vcluster
    sudo mv vcluster /usr/local/bin

    # Create a virtual cluster (--connect=false: don't auto-connect kubeconfig)
    vcluster create dev-team \
      --namespace team-dev \
      --connect=false

This creates a virtual cluster named dev-team in the team-dev namespace on your host cluster. To connect to it:

    vcluster connect dev-team --namespace team-dev

    # Now kubectl targets the virtual cluster
    kubectl get nodes       # Shows virtual nodes
    kubectl get namespaces  # Independent from host cluster

Production vCluster Configuration

For production, use Helm with a values file for better control:

    # vcluster-values.yaml
    vcluster:
      image: rancher/k3s:v1.29.0-k3s1  # Use K3s for lightweight control plane

      # Resource limits for control plane
      resources:
        limits:
          cpu: "2"
          memory: "4Gi"
        requests:
          cpu: "200m"
          memory: "512Mi"

    # Persistent storage for cluster state
    storage:
      persistence: true
      size: 10Gi
      className: managed-premium

    # Enable CoreDNS in virtual cluster
    coredns:
      enabled: true

    # Syncer configuration (what gets mirrored to host)
    sync:
      toHost:
        pods:
          enabled: true
        services:
          enabled: true
        endpoints:
          enabled: true
        persistentVolumeClaims:
          enabled: true
        ingresses:
          enabled: true
        secrets:
          enabled: true  # Sync secrets needed by pods
      fromHost:
        nodes:
          enabled: true  # Virtual cluster sees host nodes
          selector:
            all: true
        storageClasses:
          enabled: true  # Use host storage classes

    # High availability (optional)
    syncer:
      replicas: 3  # Multiple syncer instances

    # Expose via LoadBalancer (for external access)
    service:
      type: LoadBalancer

Deploy with Helm:

    helm repo add loft https://charts.loft.sh
    helm repo update

    helm install customer-a loft/vcluster \
      --namespace vcluster-customer-a \
      --create-namespace \
      --values vcluster-values.yaml

vCluster with Vanilla Kubernetes (Not K3s)

By default, vCluster uses K3s for the control plane (lightweight). For compatibility with tools that expect full Kubernetes, use the vanilla K8s distribution:

    # vcluster-k8s-values.yaml
    controlPlane:
      distro:
        k8s:
          enabled: true  # Use full Kubernetes instead of K3s

      backingStore:
        etcd:
          deploy:
            enabled: true  # Deploy real etcd instead of SQLite
            statefulSet:
              resources:
                requests:
                  cpu: 200m
                  memory: 512Mi

      # Enable high availability with multiple API servers
      statefulSet:
        highAvailability:
          replicas: 3  # 3 API server replicas

This gives you a production-grade Kubernetes control plane, but at higher resource cost (~1GB RAM per control plane).

Connecting to vCluster from CI/CD

You can extract the kubeconfig for a vCluster to use in GitOps or CI:

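    # Get kubeconfig for vCluster
    vcluster connect customer-a \
      --namespace vcluster-customer-a \
      --server https://customer-a.vclusters.example.com \
      --update-current=false \
      --print > customer-a-kubeconfig.yaml

    # Register the vCluster in ArgoCD
    # (a plain Secret holding a kubeconfig is not recognized as a cluster;
    # the CLI creates the labeled cluster secret that ArgoCD expects)
    argocd cluster add \
      "$(kubectl config current-context --kubeconfig customer-a-kubeconfig.yaml)" \
      --kubeconfig customer-a-kubeconfig.yaml \
      --name customer-a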

Now ArgoCD can deploy to the vCluster as if it were a separate cluster.

Real-World vCluster Use Case

I worked with a SaaS platform that offered “managed Kubernetes” to enterprise customers. Each customer expected:

- Full cluster-admin access

- Ability to install operators (Prometheus, Istio, etc.)

- Custom CRDs for their internal tools

- Kubernetes version of their choice (some on 1.27, others on 1.29)

Initially, they gave each customer a dedicated AKS cluster. With 50 customers, they were managing 50 clusters — patching, upgrading, monitoring, and billing separately. Operational costs were unsustainable.

With vCluster:

- Run 5 large AKS host clusters (one per region)

- Each customer gets a vCluster on the regional host

- Customers get full cluster-admin in their vCluster

- Platform team manages 5 clusters instead of 50

- Reduced infra costs by 60%, reduced ops overhead by 70%

Customers were happy (got their own “cluster”), and the platform team was happy (manageable infrastructure).

Capsule vs vCluster - The Decision Matrix

Here’s how I think about choosing between them:

| Factor | Capsule | vCluster |
|---|---|---|
| Tenant Trust Level | High (internal teams) | Low (external customers) |
| Isolation Requirement | Soft (namespace + policies) | Hard (API server isolation) |
| Cluster-Admin Access | No (namespace-scoped) | Yes (cluster-admin in vCluster) |
| CRD Conflicts | Possible (shared API) | None (isolated API) |
| Resource Overhead | Minimal (~50MB webhook) | Moderate (~500MB-1GB per vCluster) |
| Cost per Tenant | Very low | Low-medium |
| Onboarding Speed | Instant (seconds) | Fast (1-2 minutes) |
| Kubernetes Version | Same as host | Independent per vCluster |
| Operator Support | Limited (namespace-scoped operators) | Full (cluster-scoped operators) |
| Network Isolation | NetworkPolicies | Host-level NetworkPolicies (workload pods still share the host network) |
| Multi-Cluster Tools | No (single cluster) | Yes (each vCluster is independent) |
| Compliance | Depends on requirements | Strong (API isolation) |

Use Capsule When:

- Tenants are internal teams in your organization

- Cost efficiency is critical

- You control what gets deployed

- Namespace-level isolation is sufficient

- Fast onboarding (seconds) is important

- You want minimal operational overhead

Example: Platform team supporting 30 internal engineering teams with dev/staging/prod environments

Use vCluster When:

- Tenants are external customers or untrusted parties

- Tenants need cluster-admin privileges

- Tenants install custom operators and CRDs

- Different Kubernetes versions are required

- Compliance mandates API server isolation

- Tenants expect “their own cluster”

Example: SaaS platform offering managed Kubernetes to enterprise customers
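The two checklists above boil down to a simple rule: any single hard-isolation requirement tips the choice to vCluster, otherwise Capsule is the cheaper default. A minimal Python sketch of that rule follows; the field names are hypothetical and the logic is only my reading of the lists, not an official decision procedure from either project.

```python
from dataclasses import dataclass


@dataclass
class TenantProfile:
    """Answers to the questions in the two checklists (hypothetical field names)."""
    external: bool = False
    needs_cluster_admin: bool = False
    installs_crds: bool = False
    needs_own_k8s_version: bool = False
    requires_api_isolation: bool = False


def recommend(profile: TenantProfile) -> str:
    """Return 'vcluster' if any hard-isolation requirement applies, else 'capsule'."""
    needs_hard_isolation = any((
        profile.external,
        profile.needs_cluster_admin,
        profile.installs_crds,
        profile.needs_own_k8s_version,
        profile.requires_api_isolation,
    ))
    return "vcluster" if needs_hard_isolation else "capsule"


if __name__ == "__main__":
    print(recommend(TenantProfile()))                      # internal team, no special needs
    print(recommend(TenantProfile(installs_crds=True)))    # internal team shipping an operator
    print(recommend(TenantProfile(external=True)))         # external customer
```

Note that an internal team can still end up on vCluster: trust level is one input, but needing cluster-scoped resources is enough on its own.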

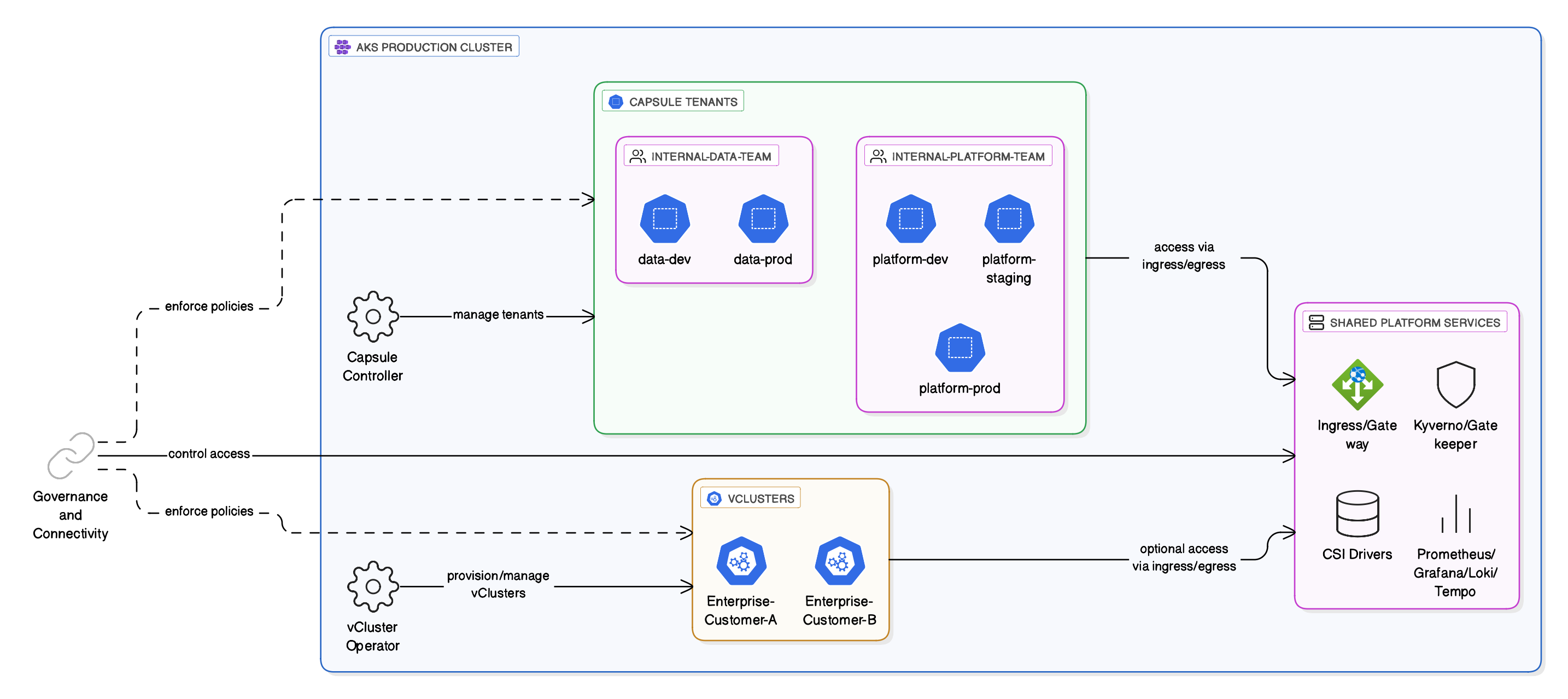

Hybrid Approach: Best of Both Worlds

In many organizations, you need both:

Internal teams use Capsule (lightweight, fast). External customers use vCluster (hard isolation). You get the cost benefits of Capsule where possible and the security of vCluster where required.

Practical Implementation: Self-Service with Backstage

One of the best ways to operationalize multi-tenancy is through an Internal Developer Portal like Backstage. Here’s how to enable self-service tenant provisioning:

Backstage Template for Capsule Tenant

    apiVersion: scaffolder.backstage.io/v1beta3
    kind: Template
    metadata:
      name: create-capsule-tenant
      title: Create Development Tenant
      description: Provision a new Capsule tenant for your team
    spec:
      owner: platform-team
      type: tenant

      parameters:
        - title: Tenant Configuration
          required:
            - teamName
            - ownerGroup
          properties:
            teamName:
              type: string
              description: Team name (lowercase, alphanumeric)
              pattern: "^[a-z0-9-]+$"
            ownerGroup:
              type: string
              description: Azure AD group for tenant owners
              default: "platform-engineers"
            namespaceQuota:
              type: integer
              description: Maximum namespaces allowed
              default: 3
              enum: [1, 3, 5, 10]
            cpuQuota:
              type: integer
              description: Total CPU cores across all namespaces
              default: 20
            memoryQuota:
              type: integer
              description: Total memory (Gi) across all namespaces
              default: 40

      steps:
        - id: render-tenant
          name: Generate Tenant Manifest
          action: fetch:template
          input:
            url: ./tenant-template
            values:
              teamName: ${{ parameters.teamName }}
              ownerGroup: ${{ parameters.ownerGroup }}
              namespaceQuota: ${{ parameters.namespaceQuota }}
              cpuQuota: ${{ parameters.cpuQuota }}
              memoryQuota: ${{ parameters.memoryQuota }}

        - id: publish-pr
          name: Create Pull Request
          action: publish:github:pull-request
          input:
            repoUrl: github.com?repo=platform-config&owner=myorg
            branchName: tenant-${{ parameters.teamName }}
            title: "Add Capsule tenant: ${{ parameters.teamName }}"
            description: |
              ## New Tenant Request

              - **Team**: ${{ parameters.teamName }}
              - **Owner Group**: ${{ parameters.ownerGroup }}
              - **Namespace Quota**: ${{ parameters.namespaceQuota }}
              - **CPU Quota**: ${{ parameters.cpuQuota }} cores
              - **Memory Quota**: ${{ parameters.memoryQuota }} Gi

      output:
        links:
          - title: Pull Request
            url: ${{ steps['publish-pr'].output.remoteUrl }}

This template lets developers fill out a form in Backstage, and it generates a PR with the Capsule tenant YAML. After platform team approval and merge, ArgoCD applies it automatically.
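The contents of ./tenant-template are not shown here, so as a rough illustration of what the rendering step produces, this Python sketch builds a Tenant manifest from the same five form fields. It is a stand-in under stated assumptions: the function name is hypothetical, it emits JSON (which kubectl and ArgoCD accept alongside YAML), and it only fills the owner, namespace quota, and CPU/memory quota fields.

```python
import json
import re

# Same pattern the form enforces on teamName.
TEAM_NAME_PATTERN = re.compile(r"^[a-z0-9-]+$")


def render_tenant(team_name: str, owner_group: str, namespace_quota: int = 3,
                  cpu_quota: int = 20, memory_quota: int = 40) -> dict:
    """Build a Capsule Tenant manifest from the Backstage form values."""
    if not TEAM_NAME_PATTERN.match(team_name):
        raise ValueError(f"invalid team name: {team_name!r}")
    return {
        "apiVersion": "capsule.clastix.io/v1beta2",
        "kind": "Tenant",
        "metadata": {"name": team_name},
        "spec": {
            "owners": [{"name": owner_group, "kind": "Group"}],
            "namespaceOptions": {"quota": namespace_quota},
            "resourceQuotas": {
                "scope": "Tenant",
                "items": [{"hard": {
                    "limits.cpu": str(cpu_quota),
                    "limits.memory": f"{memory_quota}Gi",
                }}],
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical team and group names.
    print(json.dumps(render_tenant("payments", "payments-owners"), indent=2))
```

Validating the name again at render time is deliberate: the form pattern protects the UI path, but the same template can be driven through the scaffolder API.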

Backstage Template for vCluster

    apiVersion: scaffolder.backstage.io/v1beta3
    kind: Template
    metadata:
      name: create-vcluster
      title: Create Virtual Cluster
      description: Provision a new virtual Kubernetes cluster for external customers
    spec:
      owner: platform-team
      type: vcluster

      parameters:
        - title: Virtual Cluster Configuration
          required:
            - clusterName
            - k8sVersion
          properties:
            clusterName:
              type: string
              description: Virtual cluster name
              pattern: "^[a-z0-9-]+$"
            k8sVersion:
              type: string
              description: Kubernetes version
              default: "1.29"
              enum: ["1.27", "1.28", "1.29", "1.30"]
            distro:
              type: string
              description: Kubernetes distribution
              default: k3s
              enum: ["k3s", "k8s"]
            highAvailability:
              type: boolean
              description: Enable HA control plane (3 replicas)
              default: false

      steps:
        - id: create-namespace
          name: Create Host Namespace
          action: kubernetes:apply
          input:
            manifest: |
              apiVersion: v1
              kind: Namespace
              metadata:
                name: vcluster-${{ parameters.clusterName }}
                labels:
                  tenant-type: vcluster
                  customer: ${{ parameters.clusterName }}

        - id: deploy-vcluster
          name: Deploy vCluster via Helm
          action: kubernetes:helm:install
          input:
            chart: vcluster
            repo: https://charts.loft.sh
            namespace: vcluster-${{ parameters.clusterName }}
            releaseName: ${{ parameters.clusterName }}
            values:
              controlPlane:
                distro: ${{ parameters.distro }}
              vcluster:
                image: rancher/k3s:v${{ parameters.k8sVersion }}.0-k3s1
              syncer:
                replicas: ${{ 3 if parameters.highAvailability else 1 }}

        - id: extract-kubeconfig
          name: Generate Kubeconfig
          action: vcluster:kubeconfig
          input:
            cluster: ${{ parameters.clusterName }}
            namespace: vcluster-${{ parameters.clusterName }}

      output:
        text:
          - title: Virtual Cluster Created
            content: |
              Your virtual cluster **${{ parameters.clusterName }}** is ready!

              Connect with:

              ```bash
              vcluster connect ${{ parameters.clusterName }} -n vcluster-${{ parameters.clusterName }}
              ```

With these templates, creating tenants becomes a 30-second self-service process instead of filing tickets and waiting days.

Monitoring and Cost Tracking

One critical aspect of multi-tenancy is cost visibility. You need to track resource usage per tenant to enable chargebacks or showbacks.

Prometheus Queries for Capsule

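    # Requires kube-state-metrics to expose the tenant label on namespaces, e.g.
    # --metric-labels-allowlist=namespaces=[capsule.clastix.io/tenant]

    # CPU usage per tenant (container metrics carry no tenant label, so join on namespace)
    sum by (label_capsule_clastix_io_tenant) (
      sum by (namespace) (rate(container_cpu_usage_seconds_total{container!=""}[5m]))
      * on (namespace) group_left (label_capsule_clastix_io_tenant) kube_namespace_labels{label_capsule_clastix_io_tenant!=""}
    )

    # Memory usage per tenant (aggregate across all namespaces)
    sum by (label_capsule_clastix_io_tenant) (
      sum by (namespace) (container_memory_working_set_bytes{container!=""})
      * on (namespace) group_left (label_capsule_clastix_io_tenant) kube_namespace_labels{label_capsule_clastix_io_tenant!=""}
    )

    # Total pod count per tenant (across all namespaces)
    sum by (label_capsule_clastix_io_tenant) (
      count by (namespace) (kube_pod_info)
      * on (namespace) group_left (label_capsule_clastix_io_tenant) kube_namespace_labels{label_capsule_clastix_io_tenant!=""}
    )

    # Namespace count per tenant
    count(kube_namespace_labels{label_capsule_clastix_io_tenant!=""}) by (label_capsule_clastix_io_tenant)

    # Quota utilization: compare the consumption above against the limits in the tenant spec
    # kubectl get tenant <name> -o jsonpath='{.spec.resourceQuotas.items[0].hard}'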

Prometheus Queries for vCluster

# Control plane CPU per vCluster

sum(rate(container_cpu_usage_seconds_total{pod=~".*-vcluster-0"}[5m])) by (namespace)

# Control plane memory per vCluster

sum(container_memory_working_set_bytes{pod=~".*-vcluster-0"}) by (namespace)

# Syncer resource usage

sum(container_memory_working_set_bytes{container="syncer"}) by (namespace)

# Workload pods per vCluster (synced to host)

count(kube_pod_info{namespace=~"vcluster-.*"}) by (namespace)

Grafana Dashboard Panels

Create dashboards with these panels:

- Tenant List: Table showing all tenants with namespace count, pod count, CPU/memory usage

- Cost per Tenant: Calculated as resource usage × unit cost

- Quota Utilization: Heatmap showing tenants approaching limits

- Top Resource Consumers: Bar chart of highest CPU/memory tenants

- vCluster Control Plane Health: Status of each vCluster API server

I’ve found that visibility drives better behavior. Once teams can see their resource consumption in real-time, they start optimizing. Chargebacks create accountability.
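For the cost panel, the calculation is just usage multiplied by a unit price per resource. A minimal Python sketch, assuming you export core-hours and GiB-hours per tenant from Prometheus; the unit prices below are placeholders I made up for illustration, not real cloud rates.

```python
# Placeholder unit prices (currency per core-hour and per GiB-hour); substitute your own.
CPU_PRICE_PER_CORE_HOUR = 0.03
MEMORY_PRICE_PER_GIB_HOUR = 0.004


def tenant_cost(cpu_core_hours: float, memory_gib_hours: float) -> float:
    """Showback amount for one tenant: usage x unit cost, rounded to cents."""
    cost = (cpu_core_hours * CPU_PRICE_PER_CORE_HOUR
            + memory_gib_hours * MEMORY_PRICE_PER_GIB_HOUR)
    return round(cost, 2)


def showback(usage_by_tenant: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Map tenant name -> cost, given (core-hours, GiB-hours) per tenant."""
    return {tenant: tenant_cost(cpu, mem) for tenant, (cpu, mem) in usage_by_tenant.items()}


if __name__ == "__main__":
    # Hypothetical monthly usage figures.
    report = showback({
        "platform-engineering": (1000.0, 2000.0),
        "analytics": (250.0, 4000.0),
    })
    for tenant, cost in sorted(report.items(), key=lambda item: item[1], reverse=True):
        print(f"{tenant}: {cost:.2f}")
```

Pricing requested resources instead of actual usage is a common alternative, since reservations are what force you to buy nodes.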

Security Considerations

Capsule Security Best Practices

- Always Enable Network Policies: Don’t rely on namespace isolation alone. Use Capsule’s automatic NetworkPolicy injection to enforce zero-trust networking:

    spec:
      networkPolicies:
        items:
          - policyTypes: [Ingress, Egress]
            podSelector: {}
            ingress:
              - from:
                  - namespaceSelector:
                      matchLabels:
                        capsule.clastix.io/tenant: "platform-engineering"

- Enforce Pod Security Standards: Use Kubernetes Pod Security Standards to prevent privilege escalation:

    spec:
      # Pod Security Admission reads these labels from the namespace, not from pods
      namespaceOptions:
        additionalMetadata:
          labels:
            pod-security.kubernetes.io/enforce: restricted
            pod-security.kubernetes.io/audit: restricted
            pod-security.kubernetes.io/warn: restricted

- Restrict Ingress Hostnames: Prevent tenants from claiming arbitrary domains:

    spec:
      ingressOptions:
        allowedHostnames:
          allowedRegex: "^.*\\.platform-eng\\.example\\.com$"

- Limit Storage Classes: Only allow specific storage tiers:

    spec:
      storageClasses:
        allowed:
          - standard
          # Block premium storage for cost control

- Container Registry Restrictions: Prevent pulling images from untrusted registries:

    spec:
      containerRegistries:
        allowed:
          - "mycompany.azurecr.io"
          - "ghcr.io"  # GitHub Container Registry
          - "quay.io"  # Quay.io registry
      # Note: Use registries that match full image paths
      # Pattern "docker.io/library" requires short names like "nginx:latest"

vCluster Security Best Practices

- Isolate Control Planes: Run vCluster control planes on dedicated nodes to prevent resource contention:

    # Node affinity for vCluster control planes
    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
            - matchExpressions:
                - key: workload-type
                  operator: In
                  values:
                    - vcluster-control-plane

- Restrict Syncer RBAC: The syncer needs host cluster permissions, but limit them:

    # Custom RBAC for syncer (instead of cluster-admin)
    sync:
      rbac:
        clusterRole:
          create: true
          rules:
            - apiGroups: [""]
              resources: ["pods", "services", "endpoints"]
              verbs: ["get", "list", "watch", "create", "update", "delete"]
            # Don't grant cluster-wide secret access

- Enable Virtual Cluster Audit Logging: Track what tenants do inside their vCluster:

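    controlPlane:
      advanced:
        virtualScheduler:
          enabled: true
      backingStore:
        etcd:
          deploy:
            enabled: true
      distro:
        k8s:
          enabled: true
          apiServer:
            # Audit flags are API server flags, not etcd flags; the policy file
            # must also be mounted into the control-plane pod
            extraArgs:
              - --audit-log-path=/var/log/kubernetes/audit.log
              - --audit-policy-file=/etc/kubernetes/audit-policy.yaml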

- Network Policies on Host: Even though vClusters have their own policies, enforce host-level policies too:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: vcluster-isolation
      namespace: vcluster-customer-a
    spec:
      podSelector: {}
      policyTypes:
        - Ingress
        - Egress
      ingress:
        - from:
            - podSelector: {}  # Only allow intra-namespace traffic
      egress:
        - to:
            - podSelector: {}

Troubleshooting Common Issues

Capsule Issues

Problem: Tenant owner can’t create namespaces

    # Check if user/group is correctly assigned as owner
    kubectl get tenant my-tenant -o yaml | grep -A5 owners

    # Check if namespace quota is exceeded
    # ([*] prints one name per word; without it the JSON array counts as a single word)
    kubectl get tenant my-tenant -o jsonpath='{.status.namespaces[*]}' | wc -w

    # Check Capsule webhook logs
    kubectl logs -n capsule-system deployment/capsule-controller-manager

Problem: Resource quota preventing pod creation

    # Check tenant-level aggregate quota (Capsule uses tenant-scoped quotas)
    kubectl describe tenant my-tenant

    # Check current resource requests across all tenant namespaces
    # (the tenant label is set on namespaces, not pods, so iterate over them)
    for ns in $(kubectl get ns -l capsule.clastix.io/tenant=my-tenant -o jsonpath='{.items[*].metadata.name}'); do
      kubectl get pods -n "$ns" \
        -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name,CPU:.spec.containers[*].resources.requests.cpu,MEMORY:.spec.containers[*].resources.requests.memory'
    done

    # View tenant quota configuration
    kubectl get tenant my-tenant -o jsonpath='{.spec.resourceQuotas}'

    # Inspect the ResourceQuota objects Capsule replicates into each namespace
    # (with scope: Tenant, their limits are adjusted so the aggregate stays within the tenant quota)
    kubectl get resourcequota -n tenant-namespace

Problem: Network policies blocking legitimate traffic

    # Review tenant network policy configuration
    kubectl get tenant my-tenant -o jsonpath='{.spec.networkPolicies}'

    # Test connectivity from a pod
    kubectl run test-pod --rm -it --image=nicolaka/netshoot -n tenant-namespace -- curl service.other-namespace

    # List NetworkPolicy resources in the namespace
    # (Capsule replicates the tenant's policies here; other tools may add more)
    kubectl get networkpolicy -n tenant-namespace

    # Check Capsule controller logs for network policy errors
    kubectl logs -n capsule-system deployment/capsule-controller-manager | grep -i network

vCluster Issues

Problem: Can’t connect to vCluster

    # Check if vCluster pod is running
    kubectl get pods -n vcluster-my-cluster

    # Check vCluster logs
    kubectl logs -n vcluster-my-cluster statefulset/my-cluster-vcluster

    # Verify service endpoint
    kubectl get svc -n vcluster-my-cluster

    # Reconnect kubeconfig
    vcluster connect my-cluster -n vcluster-my-cluster --update-current

Problem: Pods not syncing to host cluster

    # Check syncer logs
    kubectl logs -n vcluster-my-cluster deployment/my-cluster -c syncer

    # Verify sync config
    helm get values my-cluster -n vcluster-my-cluster

    # Check syncer RBAC permissions
    kubectl get clusterrole vcluster-my-cluster

Problem: vCluster API server crashing (OOMKilled)

1

2

3

4

5

6

7

# Check resource limits

kubectl get statefulset -n vcluster-my-cluster my-cluster-vcluster -o yaml | grep -A5 resources

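# Increase memory limit (--reuse-values keeps the rest of the release's configuration)

# The value path depends on the chart version; confirm it with: helm show values loft/vcluster

helm upgrade my-cluster loft/vcluster \
  -n vcluster-my-cluster \
  --reuse-values \
  --set vcluster.resources.limits.memory=4Gi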

Migration Strategies

Migrating from Separate Clusters to Capsule

If you’re currently running separate clusters per team and want to consolidate:

- Create Capsule tenant matching the team’s requirements

- Export resources from the source cluster:

kubectl get all,cm,secret,pvc,ingress -n production -o yaml > team-a-export.yaml

- Create namespace in Capsule tenant

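- Import resources, after stripping cluster-specific fields (metadata.namespace, uid, resourceVersion, status) from the export. kubectl rejects an apply whose -n flag disagrees with the namespace recorded in the manifest: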

kubectl apply -f team-a-export.yaml -n team-a-prod

- Update DNS to point to new cluster ingress

- Validate and decommission old cluster
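
A manifest exported with kubectl get still carries fields tied to the source cluster, and `all` also returns Pods and ReplicaSets that their controllers will recreate anyway. Here's a minimal sketch of a clean-up step, assuming jq is installed and that you export with -o json rather than -o yaml. The function name sanitize_export is illustrative, and the list of stripped fields is a starting point, not an exhaustive one:

```shell
# Sketch only: reads a `kubectl get ... -o json` List on stdin and
# writes a cleaned List to stdout. Requires jq (an assumption).
#
# - drops objects that have ownerReferences (Pods, ReplicaSets, ...)
# - removes identity and status fields assigned by the source cluster
# - removes Service cluster IPs so the target cluster can allocate new ones
sanitize_export() {
  jq '
    .items |= map(
      select(.metadata.ownerReferences == null)
      | del(
          .metadata.namespace,
          .metadata.uid,
          .metadata.resourceVersion,
          .metadata.creationTimestamp,
          .metadata.generation,
          .metadata.managedFields,
          .spec.clusterIP,
          .spec.clusterIPs,
          .status
        )
    )'
}

# Example usage (not executed here):
#   kubectl get all,cm,secret,pvc,ingress -n production -o json \
#     | sanitize_export > team-a-export.json
#   kubectl apply -f team-a-export.json -n team-a-prod
```

With metadata.namespace removed, the -n flag on kubectl apply decides where each object lands. Review the cleaned file before applying it: PVCs may still reference volumes that only exist in the old cluster, and auto-generated objects such as kube-root-ca.crt are better left out.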

Migrating from Capsule to vCluster

If a tenant needs more isolation (e.g., becoming an external customer):

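- Export from the Capsule namespace first, while kubectl still points at the host cluster:

kubectl get all,cm,secret,pvc -n team-a-prod -o yaml > export.yaml

- Create the vCluster. By default, vcluster create also switches your kube context to the new virtual cluster; pass --connect=false to stay on the host:

vcluster create team-a --namespace vcluster-team-a

- Connect to the vCluster if you are not already connected:

vcluster connect team-a --namespace vcluster-team-a

- Import to the vCluster (kubectl now targets the virtual cluster). The export still references team-a-prod, so create that namespace first, and strip cluster-specific fields (uid, resourceVersion, status) from the export before applying it:

kubectl create namespace team-a-prod

kubectl apply -f export.yaml

- Grant the customer the vCluster kubeconfig

- Decommission the Capsule tenant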

Cost Analysis: Real Numbers

Let’s compare the total cost of ownership for 50 tenants:

Scenario 1: Separate AKS Clusters (Before)

- 50 AKS clusters × $120/month (small cluster) = $6,000/month

- Operations time: ~40 hours/month (patching, monitoring, upgrades)

- Total annual cost: $72,000 + ops overhead

Scenario 2: Capsule on 2 Large Clusters

- 2 AKS clusters (production + non-prod) × $600/month = $1,200/month

- Capsule overhead: Negligible (~$5/month)

- Operations time: ~5 hours/month

- Total annual cost: $14,400 + minimal ops overhead

- Savings: 80%

Scenario 3: vCluster (10 per Host Cluster)

- 5 AKS host clusters × $400/month = $2,000/month

- vCluster overhead: 50 vClusters × 1GB RAM × $5/GB/month = $250/month

- Operations time: ~10 hours/month

- Total annual cost: $27,000 + low ops overhead

- Savings: 62%

Scenario 4: Hybrid (Capsule + vCluster)

- 2 AKS clusters for Capsule (30 internal tenants) = $1,200/month

- 2 AKS clusters for vCluster (20 external customers) = $1,000/month

- Total annual cost: $26,400

- Savings: 63% with better isolation model

The savings are significant, but the operational improvement is even bigger. Going from managing 50 clusters to managing 2-5 is transformative for platform teams.
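
To sanity-check the arithmetic in the scenarios above, here's a small sketch in plain bash. The helper names (annual_cost, savings_pct, report) are illustrative and not part of the demo repo. Inputs are the monthly figures quoted above, and ops time is left out:

```shell
#!/usr/bin/env bash
# Sketch: recompute annual totals and savings from whole-number monthly costs.

annual_cost() {   # usage: annual_cost <monthly_cost>
  echo $(( $1 * 12 ))
}

savings_pct() {   # usage: savings_pct <baseline_annual> <scenario_annual>
  # Integer division truncates, so 62.5 is reported as 62.
  echo $(( ($1 - $2) * 100 / $1 ))
}

# Scenario 1 is the baseline: 50 small clusters at 120/month each.
baseline=$(annual_cost $(( 50 * 120 )))

report() {        # usage: report <label> <monthly_cost>
  local annual
  annual=$(annual_cost "$2")
  echo "$1: ${annual}/year, $(savings_pct "$baseline" "$annual")% saved"
}

report "Capsule"  $(( 2 * 600 ))        # the ~5/month Capsule overhead is ignored
report "vCluster" $(( 5 * 400 + 250 ))  # host clusters plus vCluster overhead
report "Hybrid"   $(( 1200 + 1000 ))
```

Swap in your own cluster prices and tenant counts. The percentages only move meaningfully when the number of host clusters changes, which is why consolidation dominates the result.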

Hands-On Demo

I’ve built a complete demo that shows both Capsule and vCluster in action on AKS:

What’s included:

- Terraform to provision AKS cluster

- Capsule installation and example tenants

- vCluster deployment with K3s and vanilla K8s

- Backstage templates for self-service provisioning

- ArgoCD ApplicationSets for GitOps management

- Monitoring dashboards (Prometheus + Grafana)

- Cost tracking scripts

- Security policies and best practices

- Migration scripts (separate clusters → Capsule → vCluster)

The demo uses Taskfile for automation:

# Quick start

git clone https://github.com/NoNickeD/k8s-multi-tenancy-demo.git

cd k8s-multi-tenancy-demo

# Deploy everything

task deploy-all

# Create Capsule tenant

task capsule:create-tenant TENANT=my-team

# Create vCluster

task vcluster:create CLUSTER=customer-a

# Cleanup

task cleanup

This gives you a working multi-tenancy platform in about 15 minutes.

Conclusion

Multi-tenancy on Kubernetes isn’t one-size-fits-all. The isolation model you choose should match your trust boundaries, compliance requirements, and operational capacity.

Capsule gives you lightweight, cost-effective multi-tenancy for internal teams. It’s fast, efficient, and operationally simple. Perfect for most platform engineering use cases.

vCluster provides hard isolation with virtual clusters. It’s ideal for external customers, compliance scenarios, or cases where tenants need cluster-admin access. You get the security of separate clusters without the operational burden.

In many organizations, you’ll use both. Internal teams on Capsule. External customers on vCluster. Each tool plays to its strengths.

The key is having a self-service platform that abstracts the complexity. Whether you use Backstage, a custom portal, or GitOps automation, make tenant provisioning fast and repeatable. That’s how you scale from 5 tenants to 500 without drowning in operational toil.

Multi-tenancy isn’t about cramming more workloads onto clusters to save money. It’s about building a platform that scales efficiently while maintaining security, isolation, and developer experience. Capsule and vCluster are the tools that make that possible.